Civil Engineering Firm Requires LLM Aggregator

A national civil engineering firm frequently develops highly detailed technical specifications for large infrastructure projects. With the recent evolution of Large Language Models (LLMs) from providers such as OpenAI, Anthropic, xAI (Grok), and Cohere, the firm saw an opportunity to accelerate proposal and specification development. However, it faced a challenge: how to compare the output quality, reliability, and compliance of different LLMs side by side for mission-critical documents.

Our vision was to design an intuitive platform that empowers engineers and project leads to enter project requirements once, receive draft specifications from multiple LLMs, and easily benchmark the results for quality, compliance, and technical accuracy.

Problem Statement

The civil engineering firm needed a reliable, user-friendly way to compare outputs from multiple LLMs when drafting complex project specifications. Its existing workflows made it difficult to evaluate the quality, compliance, and accuracy of AI-generated content efficiently, increasing the risk of errors and project delays. The firm required an aggregator platform that would streamline this comparison, ensure transparency, and support rigorous technical and regulatory standards.

Industry

B2B SaaS AI

What We Did

Architecture

1 Dedicated AI Solutions Architect

User Journeys

1 Dedicated UX Resource

User Personas

1 Dedicated UX Resource

Implementation

1 Dedicated AI Integration Specialist

Quality Assurance

1 Part-time QA Resource

Strategy and Approach

We partnered with project managers, specification writers, and senior engineers to deeply understand their document development process, regulatory needs, and daily workflow. Key insights included:

Accuracy and Compliance: Specs needed to meet stringent government guidelines and industry standards; even small model hallucinations could jeopardize bids.

Need for Comparative Review: Engineers wanted to see results from multiple LLMs together so they could efficiently select the best draft.

Transparency & Audit Trail: All model prompts, settings, and outputs had to be logged and traceable.

User-Friendly, Not Technical: Users wanted a simple interface, with no prompt engineering or coding required.

Outcome

We delivered an LLM Aggregator Platform tailored for civil engineering project specification development, including:

Feature Highlights

Unified Input Dashboard: Users enter project criteria once, and the platform automatically queries each selected LLM via its API.

Side-by-Side Result Viewer: Draft specs from each LLM are displayed side by side in a synchronized, tabbed view for direct comparison.

Audit Logging & Export: Every prompt, response, and rating is logged, and the full log can be exported to Excel or PDF for compliance review or documentation.

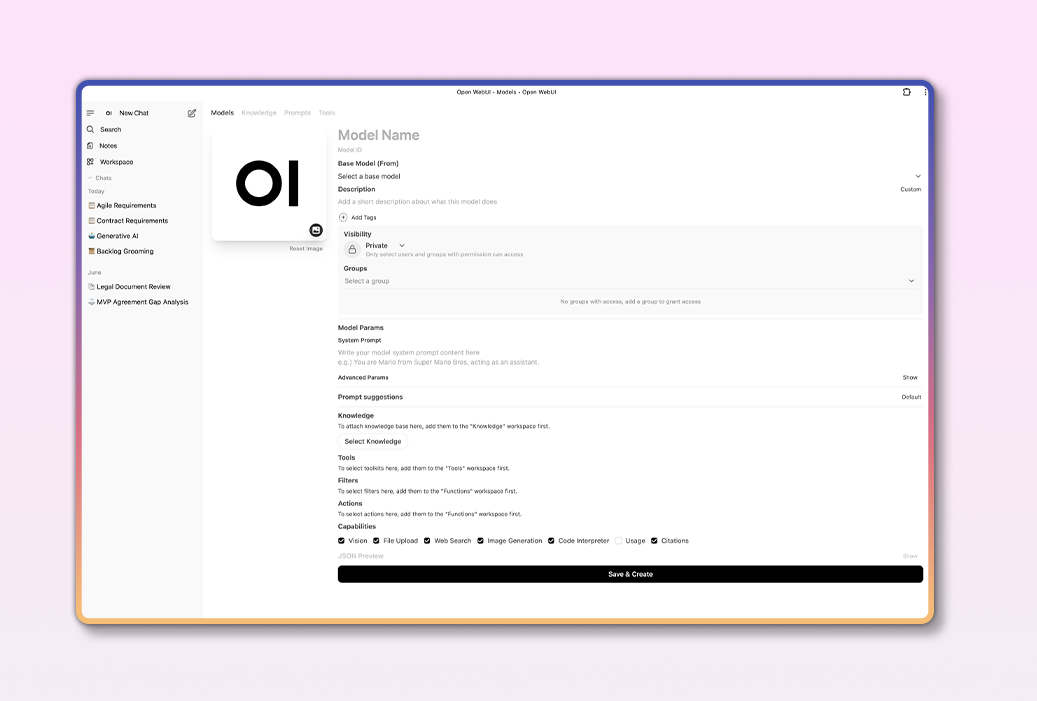

GPT and Workspace: Users can create their own custom GPTs on top of any LLM the platform integrates with.
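The single-input, multi-model flow and the audit log described in the feature highlights can be illustrated with a short sketch. This is a minimal Python example under stated assumptions, not the platform's actual implementation: `fan_out`, `AuditRecord`, `export_csv`, and the stub model functions are hypothetical names, real provider calls would go through each vendor's own SDK, and CSV stands in for the Excel/PDF export.

```python
import asyncio
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone
from typing import Awaitable, Callable, Dict, List, Optional

# Hypothetical adapter signature: takes a prompt, returns draft text.
# In practice each adapter would wrap one vendor's SDK.
ModelFn = Callable[[str], Awaitable[str]]


@dataclass
class AuditRecord:
    """One logged exchange (hypothetical schema)."""
    timestamp: str
    model: str
    prompt: str
    response: str
    rating: Optional[int] = None  # filled in later by a reviewer


async def fan_out(prompt: str, models: Dict[str, ModelFn],
                  log: List[AuditRecord]) -> Dict[str, str]:
    """Send one prompt to every selected model concurrently and log each exchange."""
    names = list(models)
    # gather() returns results in input order; return_exceptions=True keeps
    # one failing provider from discarding the other drafts.
    results = await asyncio.gather(*(models[n](prompt) for n in names),
                                   return_exceptions=True)
    drafts: Dict[str, str] = {}
    for name, result in zip(names, results):
        text = f"ERROR: {result}" if isinstance(result, Exception) else result
        drafts[name] = text
        log.append(AuditRecord(
            timestamp=datetime.now(timezone.utc).isoformat(),
            model=name, prompt=prompt, response=text))
    return drafts


def export_csv(log: List[AuditRecord]) -> str:
    """Serialize the audit log to CSV text, which spreadsheet tools can open."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(AuditRecord)])
    writer.writeheader()
    for rec in log:
        writer.writerow(asdict(rec))
    return buf.getvalue()


if __name__ == "__main__":
    # Stub models standing in for real provider adapters.
    async def model_a(prompt: str) -> str:
        return f"Draft A for: {prompt}"

    async def model_b(prompt: str) -> str:
        raise RuntimeError("provider timeout")

    audit_log: List[AuditRecord] = []
    drafts = asyncio.run(fan_out("Cast-in-place concrete spec section",
                                 {"model_a": model_a, "model_b": model_b},
                                 audit_log))
    for name, text in drafts.items():
        print(name, "->", text)
    print(export_csv(audit_log))
```

Because exceptions are captured rather than raised, a slow or failing provider shows up as a logged error entry instead of blocking the other drafts from reaching the side-by-side viewer.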

Get in Contact.

Start your project with AZRA today.